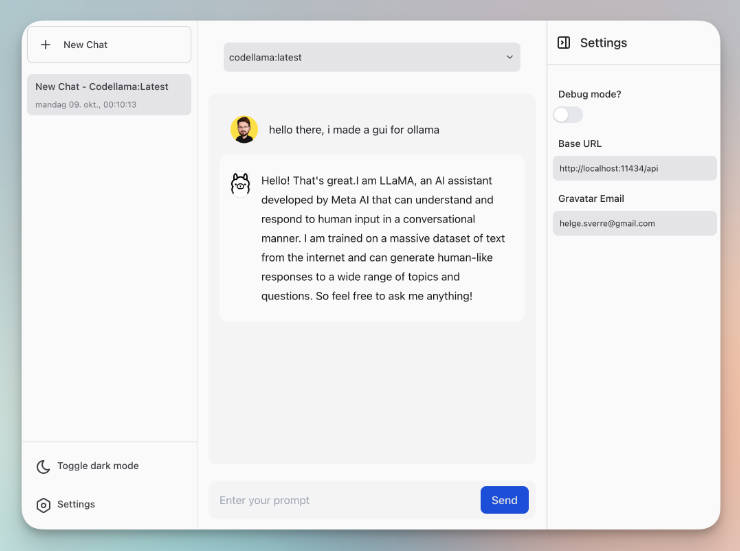

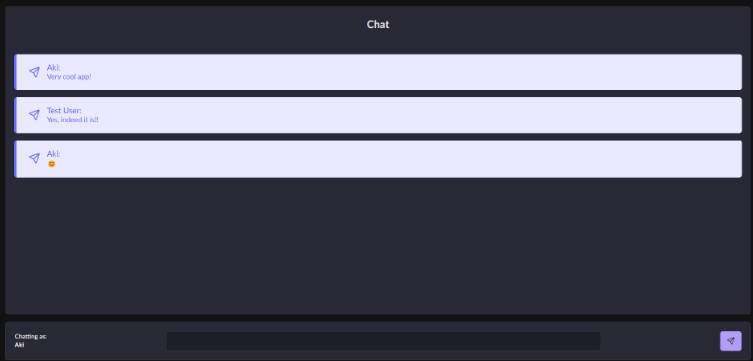

Ollama GUI: Web Interface for chatting with your local LLMs.

Ollama GUI is a web interface for ollama.ai, a tool that enables running Large Language Models (LLMs) on your local machine.

Installation

Prerequisites

- Download and install the Ollama CLI, then pull a model and start the server:

      ollama pull <model-name>
      ollama serve
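Once the server is running, one way to confirm it is reachable is to ask it which models have been pulled. The sketch below is illustrative only: it assumes Ollama's default address `http://localhost:11434` and its `GET /api/tags` endpoint, and the names `modelNames` and `listLocalModels` are hypothetical helpers, not part of this project.

```typescript
// Shape of the part of the /api/tags response this sketch relies on.
interface TagsResponse {
  models?: { name: string }[];
}

// Pure helper: extract the model names from a parsed /api/tags payload.
function modelNames(payload: TagsResponse): string[] {
  return (payload.models ?? []).map((m) => m.name);
}

// Assumed default address of a local `ollama serve` process.
const DEFAULT_OLLAMA_URL = "http://localhost:11434";

// Ask the running server which models are available locally.
async function listLocalModels(baseUrl: string = DEFAULT_OLLAMA_URL): Promise<string[]> {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  return modelNames((await res.json()) as TagsResponse);
}
```

If `listLocalModels()` rejects, the server is probably not running or is listening on a different address.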

Getting Started

- Clone the repository and start the development server:

      git clone git@github.com:HelgeSverre/ollama-gui.git
      cd ollama-gui
      yarn install
      yarn dev

- Or use the web version by allowing its origin when you start Ollama:

      OLLAMA_ORIGINS=https://ollama-gui.vercel.app ollama serve
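With the origin allowed, a page served from it can call the local Ollama HTTP API directly from the browser. The following is a minimal sketch of such a call, not this project's actual client code. It assumes the `POST /api/generate` endpoint on the default address `http://localhost:11434`, which streams newline-delimited JSON objects carrying `response` and `done` fields; `collectResponseText` and `generate` are hypothetical names.

```typescript
// One streamed line from /api/generate, limited to the fields used here.
interface GenerateChunk {
  response?: string;
  done?: boolean;
}

// Pure helper: join the text fragments found in a block of NDJSON lines.
function collectResponseText(ndjson: string): string {
  return ndjson
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as GenerateChunk)
    .map((chunk) => chunk.response ?? "")
    .join("");
}

// Send a prompt to the local server and return the full reply.
// For brevity this waits for the whole body instead of rendering tokens as they arrive.
async function generate(
  model: string,
  prompt: string,
  baseUrl: string = "http://localhost:11434", // assumed default address
): Promise<string> {
  const res = await fetch(`${baseUrl}/api/generate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, prompt }),
  });
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  return collectResponseText(await res.text());
}
```

If the page's origin has not been allowed, the browser is expected to block this request with a CORS error before any response is read.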

To-Do List

- Properly format newlines in the chat message (PHP-land has `nl2br`; we basically want the same thing)

- Browse and download available models

- Store chat history using IndexedDB

- Ensure mobile responsiveness
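For the newline item above, one possible approach is a small `nl2br`-style helper. This is only a sketch under stated assumptions, not the project's implementation: both function names are hypothetical, and the text is HTML-escaped first so that message content cannot inject markup.

```typescript
// Escape the characters that are significant in HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Escape first, then turn every newline variant into a <br> tag.
// Unlike PHP's nl2br, which keeps the original newline after the tag,
// this sketch replaces the newline entirely.
function nl2br(text: string): string {
  return escapeHtml(text).replace(/\r\n|\r|\n/g, "<br>");
}
```

In a Vue template the result would have to be rendered with `v-html`. An alternative that avoids generating HTML at all is to style the message element with `white-space: pre-wrap`.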

Built With

- Ollama.ai – CLI tool for models.

- LangUI

- Vue.js

- Vite

- Pinia

- Tailwind CSS

- VueUse

- @tabler/icons-vue

License

Licensed under the MIT License. See the LICENSE.md file for details.