llmbinge

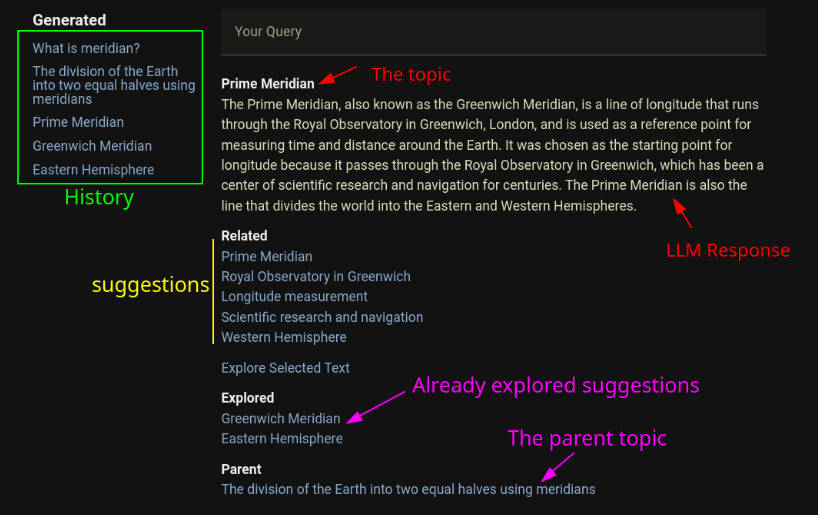

This is a simple web app for exploring ideas with an LLM while typing very little. The basic idea is to have the LLM generate a response along with a set of suggestions for that response. Clicking a suggestion explores that topic further.
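One way to turn a model reply into clickable suggestions is to ask for a plain list and parse it line by line. The sketch below is a hypothetical illustration in TypeScript, not llmbinge's actual code: the prompt wording and the helper names `buildSuggestionPrompt` and `parseSuggestions` are assumptions.

```typescript
// Hypothetical prompt asking the model for follow-up topics, one per line.
// llmbinge's real prompt wording is not documented; this is an assumption.
function buildSuggestionPrompt(topic: string, count = 5): string {
  return [
    `List ${count} short follow-up topics related to: "${topic}".`,
    "Write one topic per line with no extra commentary.",
  ].join("\n");
}

// Turn a raw model reply into a clean list of clickable suggestions.
// Models often prefix lines with "-", "*", "1." or "2)", so those markers are stripped.
function parseSuggestions(reply: string, max = 5): string[] {
  const seen = new Set<string>();
  const result: string[] = [];
  for (const rawLine of reply.split("\n")) {
    // Remove a leading bullet or list number plus surrounding whitespace.
    const line = rawLine.replace(/^\s*(?:[-*]|\d+[.)])\s*/, "").trim();
    if (line.length === 0) continue; // skip blank lines
    const key = line.toLowerCase();
    if (seen.has(key)) continue; // skip duplicates, ignoring case
    seen.add(key);
    result.push(line);
    if (result.length >= max) break; // cap the number of suggestions
  }
  return result;
}

const sampleReply = "1. Quantum entanglement\n2) Bell's theorem\n- quantum entanglement\n\n* Qubits";
// returns ["Quantum entanglement", "Bell's theorem", "Qubits"]
console.log(parseSuggestions(sampleReply));
```

Asking for one topic per line keeps parsing trivial; a structured format such as JSON would be stricter but is more likely to be broken by smaller local models.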

Currently, Ollama is the only supported backend.

Features

- Generation of responses from queries (basic)

- Generation of related queries and suggestions

- Generation based on text selection

- History management

- Parent-child relation management for topics

- Handling a set of aspects for every response (e.g., people, places)
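History and parent-child topic relations can be modelled as a tree in which every clicked suggestion becomes a child of the topic it came from. The TypeScript sketch below is hypothetical: the `Topic` fields and the `TopicStore` class are illustrative assumptions, not llmbinge's actual data model.

```typescript
// Hypothetical shape of an explored topic; llmbinge's actual fields may differ.
interface Topic {
  id: number;
  query: string;
  response: string;
  parentId: number | null; // null for a root query typed by the user
  childIds: number[];
}

// Minimal in-memory store: each new topic may point to a parent topic,
// and `history` records the order in which topics were added.
class TopicStore {
  private topics = new Map<number, Topic>();
  private nextId = 1;
  readonly history: number[] = [];

  add(query: string, response: string, parentId: number | null = null): Topic {
    if (parentId !== null && !this.topics.has(parentId)) {
      throw new Error(`unknown parent topic ${parentId}`);
    }
    const topic: Topic = { id: this.nextId++, query, response, parentId, childIds: [] };
    this.topics.set(topic.id, topic);
    if (parentId !== null) {
      // Link the new topic under its parent so the tree can be walked downwards.
      this.topics.get(parentId)!.childIds.push(topic.id);
    }
    this.history.push(topic.id);
    return topic;
  }

  get(id: number): Topic | undefined {
    return this.topics.get(id);
  }

  // Follow parent links up to the root; returns queries ordered root first.
  path(id: number): string[] {
    const queries: string[] = [];
    let current = this.topics.get(id);
    while (current !== undefined) {
      queries.unshift(current.query);
      current = current.parentId === null ? undefined : this.topics.get(current.parentId);
    }
    return queries;
  }
}

const store = new TopicStore();
const root = store.add("black holes", "...");
const child = store.add("Hawking radiation", "...", root.id);
// returns ["black holes", "Hawking radiation"]
console.log(store.path(child.id));
```

Keeping both `parentId` and `childIds` makes it cheap to show breadcrumbs (walk up) and to list sub-topics (walk down), at the cost of updating two places on insert.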

Development

Use the following commands to get the app running in dev mode:

git clone https://github.com/charstorm/llmbinge.git

cd llmbinge/llmbinge

yarn install

yarn dev

The app uses the following fixed parameters (for now):

- Ollama URL = "http://localhost:11434/api/generate"

- model = mistral
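With those fixed parameters, a single call to Ollama's `/api/generate` endpoint can be sketched as below. This is an illustration in TypeScript, not the app's own client: the helper names are hypothetical, and the sketch sets `stream: false` so Ollama returns one JSON object whose `response` field holds the generated text (the app itself may stream instead).

```typescript
// Values listed in the README; the app currently hard-codes them.
const OLLAMA_URL = "http://localhost:11434/api/generate";
const MODEL = "mistral";

interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

// Build the JSON body for /api/generate.
// stream: false asks Ollama for a single JSON reply instead of a stream of chunks.
function buildGenerateRequest(prompt: string): GenerateRequest {
  return { model: MODEL, prompt, stream: false };
}

// Pull the generated text out of a non-streaming reply (the `response` field).
function extractResponse(body: unknown): string {
  if (typeof body === "object" && body !== null) {
    const value = (body as { response?: unknown }).response;
    if (typeof value === "string") return value;
  }
  throw new Error("unexpected Ollama reply: missing `response` field");
}

// Send one prompt to a locally running Ollama server.
// Assumes `ollama serve` is running and the model was fetched with `ollama pull mistral`.
async function generate(prompt: string): Promise<string> {
  const res = await fetch(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildGenerateRequest(prompt)),
  });
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  return extractResponse(await res.json());
}
```

Usage, with Ollama running locally: `generate("Explain black holes in two sentences").then(console.log);`. Disabling streaming keeps the example short; a UI that shows text as it arrives would instead read the newline-delimited JSON chunks Ollama sends by default.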

TODO

- configuration for model and url

- configuration for output size

- favicon for the app

- support response regeneration

- support save and reload

- markdown rendering

- equation rendering

- graphviz graph rendering

- detailed instructions on setting up the project

- release 1.0